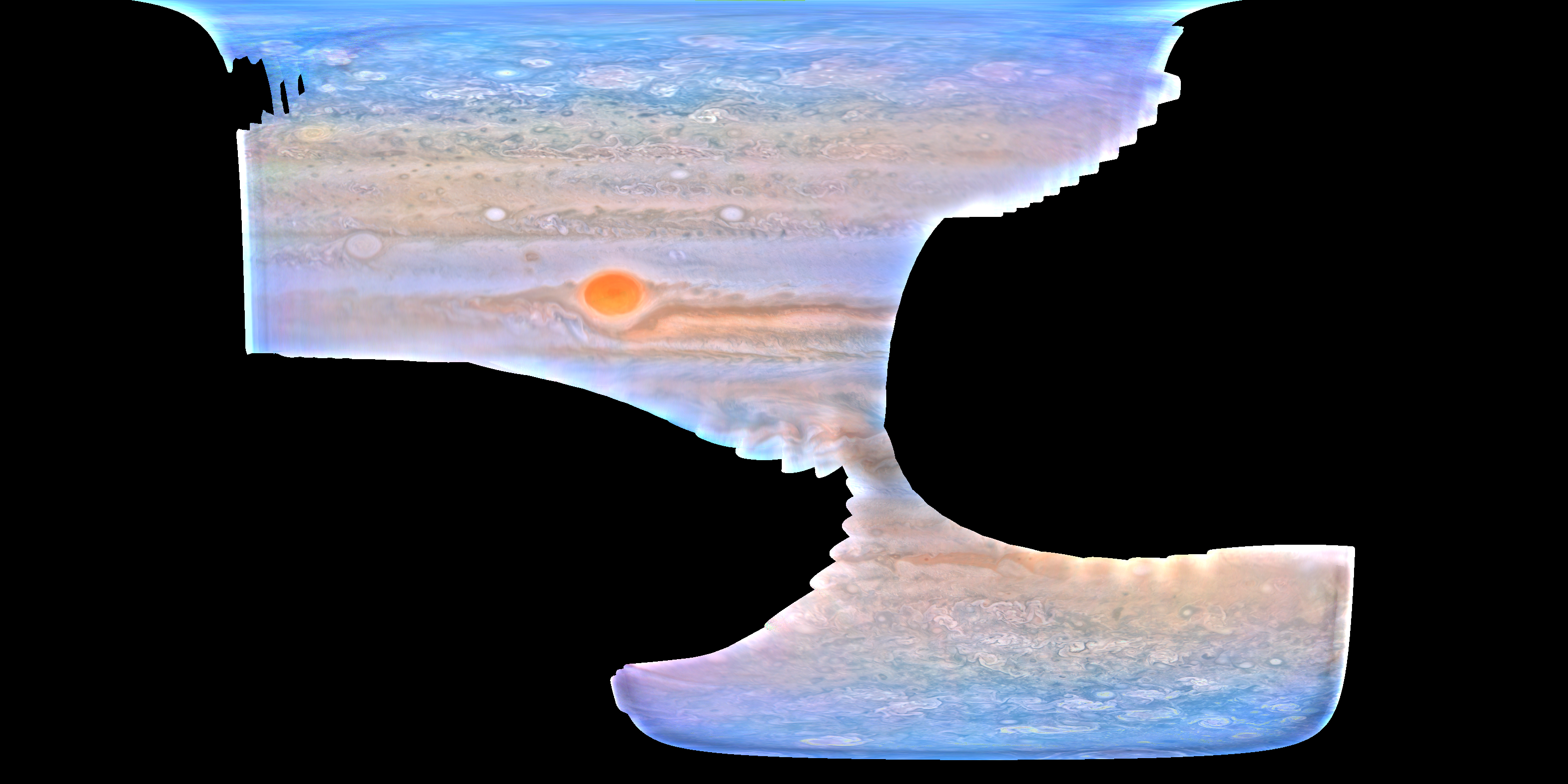

I wanted to share some code that I've been working on to project and mosaic JunoCam images. The code is written in Python, uses the SPICE library, and is available at https://github.com/ramanakumars/JunoCamProjection. I thought people in this forum might be interested in using it or comparing it with existing pipelines. The goal is to generate mosaics by stacking multiple images from a given perijove pass with little manual effort. Examples of both the projection and the mosaicing process are in the examples folder. Here is an example of a mosaic from PJ27 data:

Ramana